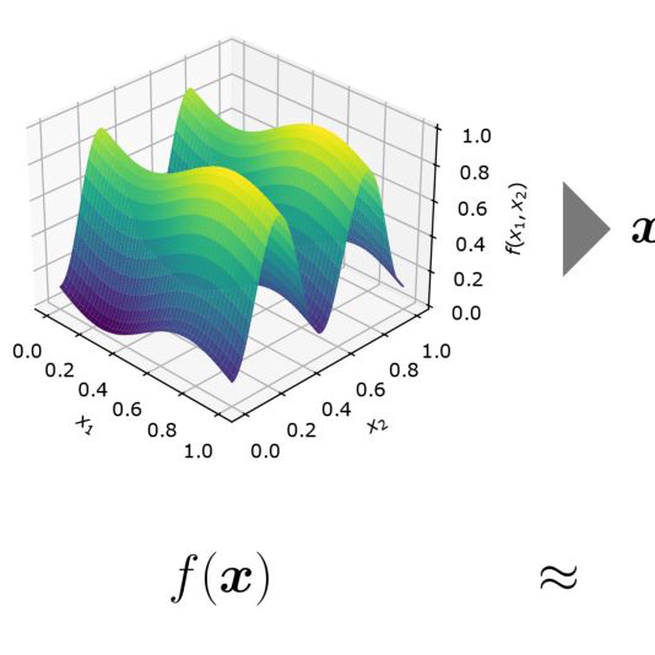

The analysis of variance (ANOVA) decomposition offers a systematic way to identify the interaction effects that contribute to a given decision output. In this paper, we introduce Neural-ANOVA, an approach that decomposes neural networks into a sum of lower-order models using the functional ANOVA decomposition. Our approach formulates a learning problem that enables fast analytical evaluation of the integrals over subspaces that arise when computing the ANOVA decomposition. Finally, we conduct numerical experiments that provide insight into its approximation properties compared with other regression approaches from the literature.

Aug 2, 2025
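For a function on [0,1]² with the uniform measure, the functional ANOVA terms are f₀ = ∫f, f₁(x₁) = ∫f dx₂ − f₀, f₂(x₂) = ∫f dx₁ − f₀, and f₁₂ = f − f₀ − f₁ − f₂. The minimal sketch below computes these terms for a hypothetical test function `f`, using Gauss-Legendre quadrature for the subspace integrals. It does not reproduce Neural-ANOVA, which obtains these integrals analytically from a trained network; the quadrature is only a stand-in to show what the decomposition produces.

```python
import numpy as np

def f(x1, x2):
    # Hypothetical test function: additive part plus an interaction term
    return x1 + 2.0 * x2**2 + 3.0 * x1 * x2

# Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1]
nodes, weights = np.polynomial.legendre.leggauss(20)
x = 0.5 * (nodes + 1.0)
w = 0.5 * weights  # weights now sum to 1 (uniform measure on [0, 1])

X1, X2 = np.meshgrid(x, x, indexing="ij")
F = f(X1, X2)  # F[i, j] = f(x[i], x[j])

f0 = w @ F @ w                              # constant term: integrate out both variables
f1 = F @ w - f0                             # main effect of x1: integrate out x2
f2 = w @ F - f0                             # main effect of x2: integrate out x1
f12 = F - f0 - f1[:, None] - f2[None, :]    # remaining pairwise interaction

# Variance carried by each term (the terms are orthogonal, so these add up)
var1 = w @ f1**2
var2 = w @ f2**2
var12 = w @ (f12**2) @ w
total = w @ ((F - f0) ** 2) @ w
print(f"f0={f0:.4f}  S1={var1/total:.3f}  S2={var2/total:.3f}  S12={var12/total:.3f}")
```

Each lower-order term integrates to zero over any of its own variables, which is what makes the variance shares S1, S2, and S12 sum to one.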

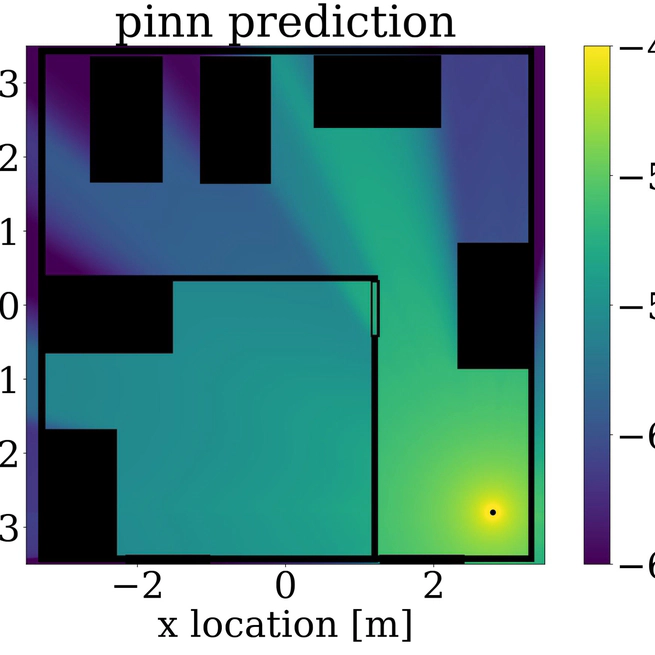

This paper presents a physics-informed machine learning method for pathloss prediction that improves generalization and prediction accuracy. The approach integrates both the physical relationships inherent in the spatial loss field and empirical pathloss measurements directly into the neural network's training process. This dual-constraint learning problem enables the model to achieve superior performance with fewer layers and parameters, which results in fast inference, a property that is crucial for downstream applications such as localization. Furthermore, the physics-informed framework substantially reduces the amount of training data required, making the method adaptable and practical for diverse real-world pathloss prediction tasks.

Dec 14, 2023
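The dual-constraint idea can be sketched as one least-squares objective: a data term on a few labeled measurements plus a weighted physics residual evaluated at unlabeled collocation points. For brevity, this sketch replaces the neural network with a hypothetical polynomial surrogate in u = log₁₀(d/d₀), and the physics term is an assumed log-distance law (dPL/du = 10n with n = 2), not the constraint used in the paper. All data are synthetic.

```python
import numpy as np

# Hypothetical physics prior (NOT the paper's constraint): log-distance law
# PL(u) = PL0 + 10 * n * u with u = log10(d / d0), so dPL/du = 10 * n.
PL0, N_EXP, DEGREE = 40.0, 2.0, 4

def features(u):
    # Polynomial features [1, u, u^2, ...] of a hypothetical surrogate model
    return np.vander(u, DEGREE + 1, increasing=True)

def feature_slopes(u):
    # Derivatives d/du of each feature: column k holds k * u**(k - 1)
    D = np.zeros((u.size, DEGREE + 1))
    for k in range(1, DEGREE + 1):
        D[:, k] = k * u ** (k - 1)
    return D

def fit(u_meas, y_meas, u_coll, lam):
    # Minimize ||A theta - y||^2 + lam * ||B theta - 10 n||^2 by stacking both
    # residuals into a single linear least-squares problem.
    A = features(u_meas)
    B = feature_slopes(u_coll)
    lhs = np.vstack([A, np.sqrt(lam) * B])
    rhs = np.concatenate([y_meas, np.sqrt(lam) * np.full(u_coll.size, 10 * N_EXP)])
    theta, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return theta

def losses(theta, u_meas, y_meas, u_coll):
    # Data loss on measurements and physics residual on collocation points
    data = np.sum((features(u_meas) @ theta - y_meas) ** 2)
    phys = np.sum((feature_slopes(u_coll) @ theta - 10 * N_EXP) ** 2)
    return data, phys

rng = np.random.default_rng(0)
u_meas = np.linspace(0.1, 2.0, 6)   # few synthetic measurement locations
y_meas = PL0 + 10 * N_EXP * u_meas + rng.normal(0.0, 3.0, u_meas.size)
u_coll = np.linspace(0.0, 2.5, 50)  # collocation points need no labels

theta_data = fit(u_meas, y_meas, u_coll, lam=0.0)   # measurements only
theta_phys = fit(u_meas, y_meas, u_coll, lam=10.0)  # measurements + physics

for name, theta in [("measurements only", theta_data), ("physics-informed", theta_phys)]:
    data, phys = losses(theta, u_meas, y_meas, u_coll)
    print(f"{name}: data loss {data:.2f}, physics residual {phys:.2f}")
```

Because the physics-informed fit minimizes the combined objective, its physics residual can never exceed that of the measurements-only fit, at the price of a somewhat larger data loss; the weight `lam` sets this trade-off.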

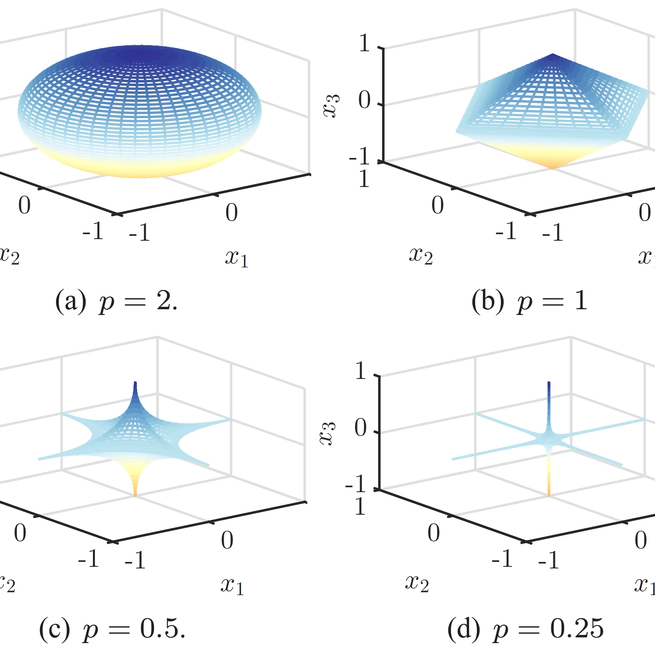

This paper investigates machine learning techniques that provide low-latency approximate solutions to inverse problems, focusing on the recovery of sparse stochastic signals within ℓp-balls in a probabilistic framework. The authors analyze the Bayesian mean-square error (MSE) of two estimators: a linear one and a structured nonlinear one comprising a linear operator followed by a Cartesian product of univariate nonlinear mappings. Crucially, the proposed nonlinear estimator has complexity comparable to that of its linear counterpart, because the nonlinear mappings can be implemented efficiently in hardware via look-up tables (LUTs). This structure is well suited to neural networks and single-iterate shrinkage/thresholding algorithms. An alternating minimization technique yields optimized operators and mappings that converge in MSE, which makes the approach highly appealing for real-time applications where traditional iterative optimization is infeasible.

Sep 5, 2016
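The estimator structure, a linear operator W followed by one scalar look-up table per output coordinate, can be sketched as follows. This is a minimal sketch under hypothetical assumptions: an orthogonal forward operator H, Bernoulli-Gaussian sparse signals instead of the paper's ℓp-ball model, and 128 uniform bins. It fixes W to the linear MMSE operator and performs only the LUT update, which is one half of an alternating minimization; the W-update is omitted.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p_active, sigma = 16, 0.1, 0.1  # dimension, sparsity level, noise std (hypothetical)

# Hypothetical forward model y = H x + w with a random orthogonal H
H, _ = np.linalg.qr(rng.normal(size=(n, n)))

def sample(num):
    # Bernoulli-Gaussian sparse signals, a stand-in for the paper's signal model
    x = rng.normal(size=(num, n)) * (rng.random((num, n)) < p_active)
    y = x @ H.T + sigma * rng.normal(size=(num, n))
    return x, y

x_tr, y_tr = sample(20000)
x_te, y_te = sample(20000)

# Linear MMSE operator W = Cxx H^T (H Cxx H^T + sigma^2 I)^{-1}, with Cxx = p I
Cxx = p_active * np.eye(n)
W = Cxx @ H.T @ np.linalg.inv(H @ Cxx @ H.T + sigma**2 * np.eye(n))

# Fixed bins shared by all look-up tables
edges = np.linspace(-4.0, 4.0, 129)
centers = 0.5 * (edges[:-1] + edges[1:])

def bin_index(z):
    # Map each value to its bin; values outside [-4, 4] go to the end bins
    return np.clip(np.digitize(z, edges) - 1, 0, centers.size - 1)

def fit_luts(z, x):
    # With W fixed, the MSE-optimal entry of a piecewise-constant table is the
    # empirical mean of x_i over the training samples whose z_i falls in that bin.
    luts = np.tile(centers, (n, 1))  # empty bins fall back to the identity map
    idx = bin_index(z)
    for i in range(n):
        sums = np.bincount(idx[:, i], weights=x[:, i], minlength=centers.size)
        counts = np.bincount(idx[:, i], minlength=centers.size)
        filled = counts > 0
        luts[i, filled] = sums[filled] / counts[filled]
    return luts

def apply_luts(z, luts):
    # Output coordinate i is luts[i, bin of z_i]: one table read per entry
    return luts[np.arange(n), bin_index(z)]

luts = fit_luts(y_tr @ W.T, x_tr)

z_te = y_te @ W.T  # linear stage, z = W y for each test sample
mse_linear = np.mean((z_te - x_te) ** 2)
mse_lut = np.mean((apply_luts(z_te, luts) - x_te) ** 2)
print(f"linear MSE {mse_linear:.5f}, linear + LUT MSE {mse_lut:.5f}")
```

At inference, each nonlinear mapping costs one bin lookup per coordinate, so the cost stays close to that of the linear estimator while the tables can learn shrinkage-like behavior from data.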

This paper presents a new algorithm for approximating multivariate functions by nomographic functions, which consist of a one-dimensional, continuous, and monotone outer function applied to a sum of univariate continuous inner functions. The core of the method is a cone-constrained Rayleigh quotient optimization problem, which draws on the analysis of variance (ANOVA) for a dimension-wise decomposition of the function and on optimization over monotone polynomials. An example demonstrates the algorithm's utility for distributed function computation over multiple-access channels.

Jul 13, 2015
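A nomographic function has the form f(x₁, …, x_K) = ψ(φ₁(x₁) + … + φ_K(x_K)). The sketch below shows two exact nomographic representations and how a multiple-access channel that superimposes transmitted signals can compute them: node k sends φ_k(x_k), the channel adds the transmissions, and the receiver applies ψ. The idealized channel, the helper `over_the_air`, and the sensor values are hypothetical; the sketch illustrates the function class only and does not implement the paper's approximation algorithm.

```python
import numpy as np

rng = np.random.default_rng(0)

def over_the_air(x, inner, outer, noise_std=0.0):
    # Hypothetical idealized multiple-access channel: node k transmits
    # inner[k](x[k]), the channel superimposes all transmissions, and the
    # receiver applies the monotone outer function to the (noisy) sum.
    transmitted = np.array([phi(xk) for phi, xk in zip(inner, x)])
    received = transmitted.sum() + noise_std * rng.normal()
    return outer(received)

x = np.array([1.5, 2.0, 0.5, 4.0])  # hypothetical positive sensor readings

# Product of positive values: prod x_k = exp(sum log x_k)
prod_est = over_the_air(x, [np.log] * x.size, np.exp)

# Euclidean norm: sqrt(sum x_k^2); sqrt is monotone on [0, inf)
norm_est = over_the_air(x, [np.square] * x.size, np.sqrt)

print(prod_est, np.prod(x))
print(norm_est, np.linalg.norm(x))
```

Functions without such an exact representation are where an approximation algorithm like the one described above becomes necessary.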